Cardano Founder Charles Hoskinson Concerned About AI Censorship

The post Cardano Founder Charles Hoskinson Concerned About AI Censorship first appeared on Crypto Beat News.

Charles Hoskinson, founder of Cardano, is concerned about the control big tech companies exert over AI systems to censor information and train them with biases.

What Is AI Censorship and How Does It Work?

AI censorship involves modifying AI responses to adhere to specific guidelines, often to prevent the spread of harmful, offensive, or politically sensitive content. Companies like OpenAI, Google, and Meta have implemented mechanisms to filter responses on topics such as race, politics, and gender identity. For example, a Gizmodo study found that AI chatbots often avoid generating outputs on sensitive issues, highlighting the extent of AI censorship. AI systems are programmed to detect and filter out specific keywords or sentiments deemed inappropriate by regulatory standards.

AI censorship works through a combination of techniques and technologies designed to monitor, filter, and control the output of AI systems. Here’s a detailed look at how AI censorship technically functions:

1. Keyword Filtering

AI systems can be programmed to detect and filter out specific keywords or phrases. This involves using natural language processing (NLP) techniques to identify words that are flagged as inappropriate, harmful, or sensitive. When these keywords are detected, the system either modifies the response or refuses to generate it altogether.
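As a rough illustration of keyword filtering, here is a minimal Python sketch. The blocklist, the `filter_response` function, and the refusal message are all hypothetical names made up for this example; no vendor's actual filter is shown here, and production systems are far more elaborate.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKED_TERMS = {"forbiddenword", "secret recipe"}

# One case-insensitive pattern matching any blocked term as a whole word or phrase.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in sorted(BLOCKED_TERMS)) + r")\b",
    re.IGNORECASE,
)


def filter_response(text: str, refuse: bool = False) -> str:
    """Redact blocked terms, or refuse outright when `refuse` is True."""
    if not _PATTERN.search(text):
        return text  # nothing flagged, pass the text through unchanged
    if refuse:
        return "Sorry, I can't help with that."
    return _PATTERN.sub("[redacted]", text)
```

Calling `filter_response("The Secret Recipe is here")` would return the text with the phrase replaced by `[redacted]`, while passing `refuse=True` returns the refusal message instead.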

2. Sentiment Analysis

AI censorship often employs sentiment analysis to evaluate the emotional tone of content. By analyzing whether the sentiment is positive, negative, or neutral, the system can determine if the content aligns with acceptable guidelines. Negative or inflammatory sentiments may trigger censorship mechanisms to alter or block the content.
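The idea can be sketched with a toy lexicon-based scorer. Everything here is an assumption for illustration: the word list, the scores, the `threshold` value, and the function names. Real systems typically use trained models rather than a hand-written lexicon.

```python
# Hypothetical toy lexicon: word -> sentiment score.
LEXICON = {
    "great": 1, "love": 1, "helpful": 1,
    "hate": -1, "awful": -1, "stupid": -1,
}


def sentiment_score(text: str) -> float:
    """Average the scores of known words; 0.0 means neutral or unknown."""
    words = [w.strip(".,!?;:").lower() for w in text.split()]
    scores = [LEXICON[w] for w in words if w in LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


def should_block(text: str, threshold: float = -0.5) -> bool:
    """Block content whose average sentiment is at or below the threshold."""
    return sentiment_score(text) <= threshold
```

With this sketch, a strongly negative sentence is blocked, while a mixed sentence such as "I love it but hate the ads" averages out to neutral and passes.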

3. Machine Learning Models

Machine learning models are trained on vast datasets to recognize patterns associated with censored content. These models can be supervised, where they are trained with labeled data (e.g., flagged and non-flagged content), or unsupervised, where they identify anomalies or new patterns on their own. The training data typically includes examples of both acceptable and unacceptable content to teach the AI what to censor.
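The supervised case can be sketched with a tiny naive Bayes text classifier written in plain Python. The six training sentences, the labels `allowed` and `flagged`, and the function names are invented for this example; real moderation models are trained on far larger datasets and usually use neural networks.

```python
import math
from collections import Counter

# Hypothetical labeled examples, for illustration only.
TRAINING_DATA = [
    ("how to bake bread at home", "allowed"),
    ("best hiking trails near the lake", "allowed"),
    ("recipe for chocolate cake", "allowed"),
    ("how to build a dangerous weapon", "flagged"),
    ("instructions to make a dangerous explosive", "flagged"),
    ("buy an illegal weapon online", "flagged"),
]


def train(examples):
    """Count words per label and examples per label."""
    word_counts = {}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the most likely label using naive Bayes with add-one smoothing."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        score = math.log(count / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


MODEL = train(TRAINING_DATA)
```

Here `classify("dangerous weapon plans", MODEL)` comes out as `flagged`, because those words appear only in the flagged training examples.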

4. Contextual Understanding

Advanced AI systems use contextual understanding to assess the meaning of phrases within larger contexts. This helps in distinguishing between benign uses of potentially harmful words and actual harmful content. Techniques like context-aware NLP and deep learning are used to analyze the surrounding text and ensure more accurate censorship.

5. Rule-Based Systems

In addition to machine learning, rule-based systems are implemented to enforce specific guidelines. These rules are predefined and can include various conditions under which content should be censored. For example, rules might specify that any content mentioning certain political figures or events in a derogatory manner should be censored.
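A rule-based check along these lines might look like the following sketch. The `Rule` class, the placeholder name "senator example", the word lists, and the 500-character limit are all hypothetical and chosen only to show how predefined conditions map to actions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    name: str
    matches: Callable[[str], bool]
    action: str  # "block" or "warn"


# Hypothetical lists, for illustration only.
PROTECTED_NAMES = {"senator example"}
DEROGATORY_WORDS = {"idiot", "crook"}


def derogatory_about_protected(text: str) -> bool:
    """True when a protected name and a derogatory word appear together."""
    lowered = text.lower()
    return any(name in lowered for name in PROTECTED_NAMES) and any(
        word in lowered for word in DEROGATORY_WORDS
    )


RULES = [
    Rule("derogatory-political", derogatory_about_protected, "block"),
    Rule("too-long", lambda text: len(text) > 500, "warn"),
]


def evaluate(text: str) -> Optional[Rule]:
    """Return the first rule that matches, or None if the text passes."""
    for rule in RULES:
        if rule.matches(text):
            return rule
    return None
```

Rules are checked in order, so the list position acts as a priority: the first matching rule decides the outcome.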

6. Human Oversight

While AI systems perform the bulk of censorship automatically, human oversight is often involved to review borderline cases and improve the algorithms. Human moderators might review flagged content to determine if the AI’s decision was correct and provide feedback to refine the system.
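One common way to combine automation with human review is to act automatically only on confident decisions and queue everything else. The sketch below assumes a classifier that outputs a probability; the `low` and `high` thresholds, the `route` function, and the in-memory queue are assumptions for illustration.

```python
from collections import deque

# Hypothetical in-memory queue of items awaiting a human moderator.
REVIEW_QUEUE = deque()


def route(text: str, flagged_probability: float,
          low: float = 0.2, high: float = 0.8) -> str:
    """Decide automatically when confident; otherwise send to human review."""
    if flagged_probability >= high:
        return "block"
    if flagged_probability <= low:
        return "allow"
    REVIEW_QUEUE.append(text)  # borderline case: a human makes the final call
    return "review"
```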

7. Adaptive Algorithms

Adaptive algorithms continuously learn and update their censorship criteria based on new data and feedback. This dynamic adjustment helps the AI stay current with evolving language patterns and new types of sensitive content.
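A very simplified picture of this feedback loop is an online, perceptron-style update: whenever a moderator disagrees with the filter, the weights of the words involved are nudged. The `AdaptiveFilter` class and its parameters are hypothetical and only sketch the idea under that assumption.

```python
class AdaptiveFilter:
    """Toy online filter that adjusts word weights from moderator feedback."""

    def __init__(self, learning_rate: float = 1.0):
        self.weights = {}  # word -> weight; positive weights push toward flagging
        self.learning_rate = learning_rate

    def score(self, text: str) -> float:
        return sum(self.weights.get(w, 0.0) for w in text.lower().split())

    def is_flagged(self, text: str) -> bool:
        return self.score(text) > 0

    def feedback(self, text: str, should_flag: bool) -> None:
        """Update weights only when the current decision was wrong."""
        if self.is_flagged(text) == should_flag:
            return
        delta = self.learning_rate if should_flag else -self.learning_rate
        for word in set(text.lower().split()):
            self.weights[word] = self.weights.get(word, 0.0) + delta
```

After a moderator marks a previously unseen phrase as objectionable, the filter starts flagging it; a later correction on a harmless message lowers the weights of the words it shares.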

8. Real-Time Monitoring

AI systems can perform real-time monitoring of content across platforms. This involves scanning live inputs, such as social media posts or chat messages, and instantly applying censorship protocols if any restricted content is detected.
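In code, real-time monitoring amounts to checking each message as it arrives rather than in batches. The sketch below uses a Python generator over any iterable of messages; the `monitor` function and the `is_restricted` callback are hypothetical stand-ins for whatever detection logic a platform actually uses.

```python
from typing import Callable, Iterable, Iterator, Tuple


def monitor(
    stream: Iterable[str], is_restricted: Callable[[str], bool]
) -> Iterator[Tuple[str, str]]:
    """Yield (message, decision) pairs one at a time as messages arrive."""
    for message in stream:
        decision = "blocked" if is_restricted(message) else "delivered"
        yield message, decision
```

Because the function is a generator, each message is evaluated as soon as it is read from the stream, without waiting for the rest.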

Criticism Towards AI Censorship

Not everyone is happy with this kind of censorship. On June 30, 2024, Hoskinson (@IOHK_Charles) tweeted: “I continue to be concerned about the profound implications of AI censorship. They are losing utility over time due to ‘alignment’ training. This means certain knowledge is forbidden to every kid growing up, and that’s decided by a small group of people you’ve never met and can’t vote out of office.”

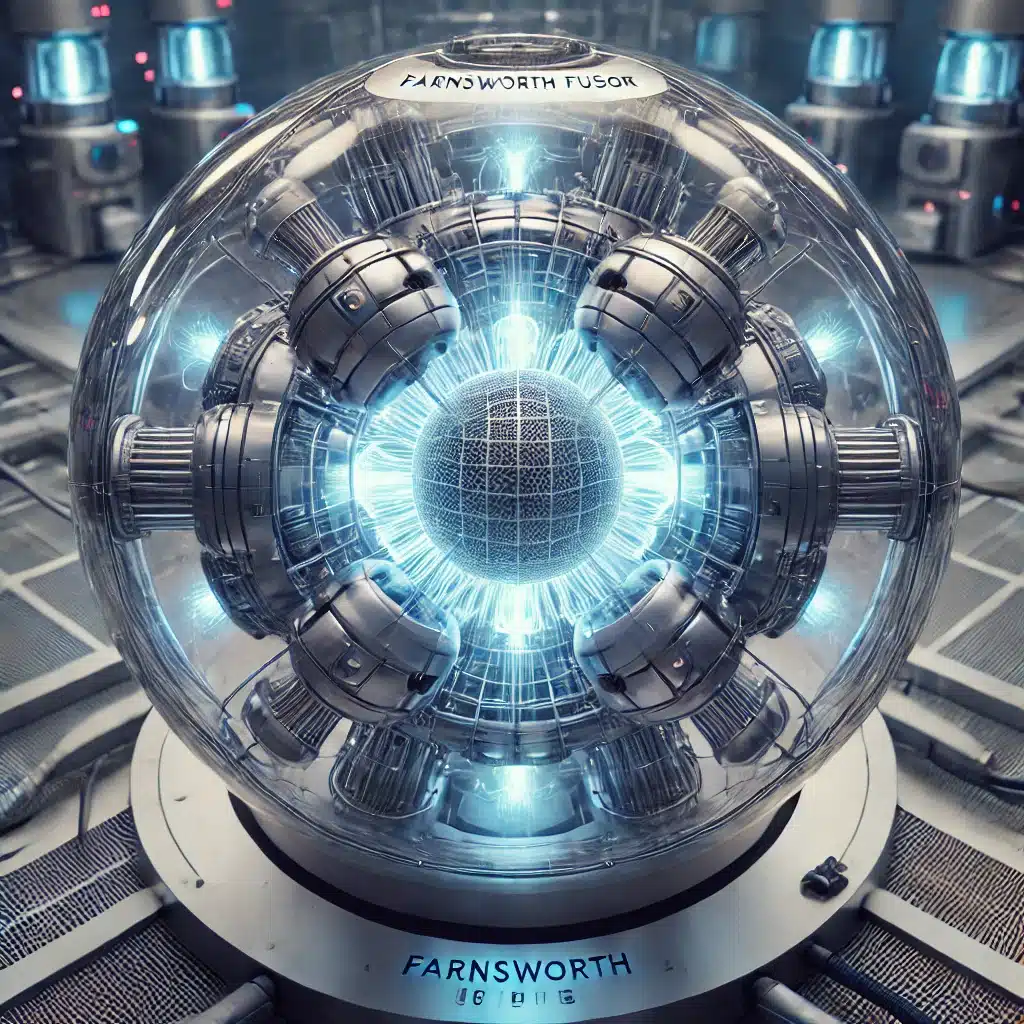

He ran a test in which he asked OpenAI’s ChatGPT and Anthropic’s Claude how to build a Farnsworth fusor. Claude refused, citing potential risks, while ChatGPT provided instructions. We repeated the test and got the same result: ChatGPT offered instructions, while Claude refused.

Understanding the Farnsworth Fusor

The Farnsworth fusor, invented by Philo T. Farnsworth in the 1960s, is a device designed to achieve nuclear fusion. It consists of a spherical vacuum chamber with a central cathode grid, using an electric field to heat ions for fusion. High voltage creates a plasma, accelerating deuterium ions towards the center where fusion occurs. While mainly used for research and educational purposes, it demonstrates the principles of nuclear fusion.

Building and operating a Farnsworth fusor is not without risk. The main hazards are:

- High Voltage: The fusor requires high-voltage power supplies, posing a risk of severe electric shock or burns.

- Radiation Exposure: Operating a fusor generates neutron radiation, which can be harmful without proper shielding and monitoring.

- Vacuum Hazards: The vacuum system can implode if not properly constructed, causing potential injury.

- Deuterium Handling: Deuterium gas is flammable and must be handled with care to avoid leaks and potential explosions.

- Heat: The device can generate significant heat, leading to burns or fire hazards.

About Charles Hoskinson and Cardano

Born on November 5, 1987, Hoskinson studied mathematics and cryptography before co-founding Ethereum. He left Ethereum in 2014 due to differing visions and founded IOHK (Input Output Hong Kong) in 2015.

IOHK developed Cardano, a third-generation blockchain platform focusing on security and scalability for decentralized applications (dApps). Cardano distinguishes itself from most other blockchain platforms by its research-driven approach and the use of peer-reviewed academic research.

Hoskinson advocates for open-source software and decentralized governance, frequently engaging with the cryptocurrency community. His vision for Cardano includes addressing global challenges such as financial inclusion and governance through innovative blockchain solutions.
